Why Crawlability Is the Foundation of SEO in 2025 (And How to Fix It)

Imagine writing amazing content—but Google can’t find it. That’s what happens when crawlability is poor. Crawlability refers to how easily search engines can access and navigate your website. If crawlers can’t reach or process your pages, they’ll never appear in search results—no matter how good your content is.

In this 2025 guide, we’ll explain how crawling works, what crawl budget means, and how to audit and fix crawlability issues. Drawing on guidance from Search Engine Land, Google, and industry leaders, this post shows you how to build a site that both crawlers and users love.

1. What Crawlability Really Is

Search engines like Google use bots (Googlebot, Bingbot) to crawl the web—discovering pages, following internal links, and noting structure. If a page can’t be crawled, it doesn’t exist in a search engine’s eyes.

Crawlability ensures bots can:

Access your sitemap

Follow internal links

Respect robots.txt and meta rules

Visit important pages within a few clicks from the homepage

Without crawlability, even great pages remain invisible and unindexed. This makes it the first layer of your technical SEO pyramid.

2. Crawl Budget: Giving Priority to What Matters

Even crawlable sites have limits. Google assigns each site a “crawl budget”—a cap on how many pages it will crawl in a given timeframe.

If crawlers waste time on low-value pages (like paginated archives or old forms), your important content may not get crawled or indexed. For larger sites, managing this budget efficiently is essential.

3. Crawlability vs. Indexability

Crawlability: Can the bot access the page?

Indexability: Once accessed, should the page be added to the search index?

They’re related, but separate: crawlability without indexability is wasted effort.

For example, a “noindex” tag prevents indexing but doesn’t block crawling, while a robots.txt disallow rule blocks crawling entirely. Because a blocked page is never fetched, crawlers cannot see a noindex tag on it, so the two should not be combined on the same URL.
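
To make the distinction concrete, here is a minimal Python sketch that checks whether a page's HTML carries a robots meta tag asking not to be indexed. The function name `is_noindex` and the sample markup are illustrative assumptions, and the check only covers the meta tag, not the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(is_noindex(page))
```

A page that returns True here can still be crawled; it is only asking to stay out of the index.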

4. Essential Crawlability Best Practices

– XML Sitemap

A sitemap guides bots to your key pages, especially those buried deep in the site. Most CMS platforms (for example, WordPress with Yoast or Rank Math) generate one automatically.

Tip: Submit it in Google Search Console and include it in robots.txt.
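
As a rough illustration, the sketch below lists the URLs declared in a sitemap so you can compare them with the pages you actually want crawled. The sample sitemap and the `example.com` URLs are placeholders, and `sitemap_urls` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Placeholder sitemap; a real one would be downloaded from your site.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/services/</loc></url>
</urlset>"""


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> value listed in a urlset sitemap."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


if __name__ == "__main__":
    for url in sitemap_urls(SAMPLE_SITEMAP):
        print(url)
```

To reference the sitemap from robots.txt, add a line of the form `Sitemap: https://www.example.com/sitemap.xml`, using your own absolute URL.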

– Clean Internal Linking

Every important page should be reachable within three clicks of the homepage and have at least one internal link pointing to it. A well-structured site helps crawlers map your content efficiently.
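
One way to check the three-click guideline is a breadth-first search over your internal link graph. The sketch below assumes you already have that graph as a dictionary (for example, exported from a crawler); the paths and the function names `click_depths` and `audit_depth` are made up for illustration.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from the homepage."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_depth(links: dict[str, list[str]], home: str, max_depth: int = 3):
    """Return (pages deeper than max_depth, pages unreachable from home)."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depths.items() if d > max_depth)
    orphans = sorted(all_pages - set(depths))
    return too_deep, orphans


if __name__ == "__main__":
    # Hypothetical internal link graph: page -> pages it links to.
    site = {
        "/": ["/blog", "/services"],
        "/blog": ["/blog/p1"],
        "/blog/p1": ["/blog/p2"],
        "/blog/p2": ["/blog/p3"],
        "/blog/p3": [],
        "/old-landing": ["/"],
    }
    print(audit_depth(site, "/"))
```

In this sample graph, `/blog/p3` sits four clicks deep and `/old-landing` is an orphan because nothing links to it.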

– robots.txt & Meta Rules

Use robots.txt to block irrelevant areas (e.g., staging). Ensure you’re not accidentally blocking vital pages. Use noindex tags for pages you want crawled but not shown in search.
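
A quick way to catch accidental blocks is to test your important URLs against your robots.txt rules. This sketch uses Python's standard `urllib.robotparser`; the rules and URLs are placeholders. Note that this parser does simple prefix matching and may not interpret wildcard patterns the way Googlebot does, so treat the result as a first pass only.

```python
from urllib.robotparser import RobotFileParser

# Placeholder rules; in practice, paste the contents of your own robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given user agent may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    important = [
        "https://www.example.com/staging/index.html",
        "https://www.example.com/blog/post",
    ]
    print(blocked_urls(ROBOTS_TXT, important))
```

Any vital page that shows up in the returned list is being kept away from crawlers.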

– Avoid Duplicate Content

Duplicate pages waste crawl budget and cause confusion. Consolidate them, use canonical tags, or remove them entirely.
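
Many duplicates are the same page reached through slightly different URLs. The sketch below groups URLs that collapse to the same address after a few normalization rules. The rules themselves (lowercasing the host, dropping a trailing slash, removing `utm_*`, `gclid`, and `fbclid` parameters) are assumptions for illustration; adapt them to how your site actually treats URLs.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking parameters that do not change page content.
TRACKING_PREFIXES = ("utm_",)
TRACKING_PARAMS = {"gclid", "fbclid"}


def normalize(url: str) -> str:
    """Collapse common URL variants into one comparable form."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith(TRACKING_PREFIXES)
        and key.lower() not in TRACKING_PARAMS
    ]
    query.sort()  # parameter order should not create a "new" page
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def duplicate_groups(urls: list[str]) -> dict[str, list[str]]:
    """Map each normalized URL to its variants, keeping only real duplicates."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


if __name__ == "__main__":
    crawled = [
        "https://Example.com/shoes/",
        "https://example.com/shoes?utm_source=news",
        "https://example.com/shoes?color=red",
        "https://example.com/about",
    ]
    print(duplicate_groups(crawled))
```

Each group is a candidate for a single canonical URL, with the variants redirected or pointed at it with a canonical tag.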

– Fix Crawl Errors

Use tools like Google Search Console and Screaming Frog to catch 4xx/5xx errors, redirects, and broken links. Fix promptly to keep bots navigating smoothly.

– Speed & Performance

Sites that respond quickly tend to be crawled more, because Googlebot can fetch more pages without overloading the server. Optimize server responses, compress images, and improve Core Web Vitals to help both users and bots.

5. Advanced Optimization for Crawl Efficiency

1. Crawl Budget Optimization

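Keep crawlers away from low-value pages (filters, old posts, tag archives) with robots.txt rules. Meta directives such as noindex do not stop crawling; use them for pages that may be crawled but should stay out of the index.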

2. Use Log File Analysis

Log file analyzers such as those from Screaming Frog and Semrush show which pages bots crawl most and which they never reach.
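
If you want a quick look before reaching for a dedicated tool, a short script can count crawler hits per URL. This sketch assumes your server writes the common "combined" access log format and identifies Googlebot by its user-agent string alone; user agents can be spoofed, so a thorough audit would also verify the requesting IP. The sample log lines and function names are illustrative.

```python
import re
from collections import Counter

# Matches one line of the Apache/Nginx "combined" log format.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines) -> Counter:
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


def uncrawled(known_paths, hits: Counter) -> list[str]:
    """Paths you care about that never appear in the bot's requests."""
    return sorted(path for path in known_paths if hits[path] == 0)


# Made-up sample lines for demonstration.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Jan/2025:10:00:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Jan/2025:10:05:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2025:10:06:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

if __name__ == "__main__":
    hits = googlebot_hits(SAMPLE_LOG)
    print(hits.most_common())
    print(uncrawled(["/blog/post-1", "/pricing"], hits))
```

Comparing the uncrawled list with your sitemap is a simple way to spot important pages that bots are not reaching.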

3. Implement Schema Markup

Although not directly affecting crawlability, structured data helps search engines understand your pages faster.

4. Optimize for JavaScript

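Google can render JavaScript, but rendering adds a delay and other crawlers may not run scripts at all. Plain HTML links (anchor elements with an href attribute) and fewer render-blocking scripts make crawling faster and more reliable.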
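
To see roughly what a crawler finds before any JavaScript runs, you can extract the plain anchor links from a page's raw HTML. This is a simplified sketch using Python's standard HTML parser; the markup, the base URL, and the `static_links` helper are illustrative assumptions.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags in raw, unrendered HTML."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip script pseudo-links and same-page fragments.
        if href and not href.startswith(("javascript:", "#")):
            self.links.append(urljoin(self.base_url, href))


def static_links(html: str, base_url: str) -> list[str]:
    """Return absolute URLs of links discoverable without running scripts."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Sample navigation: only the first item is a crawlable plain link.
SAMPLE_HTML = """
<nav>
  <a href="/services">Services</a>
  <span onclick="location.href='/pricing'">Pricing</span>
  <a href="javascript:void(0)">Menu</a>
</nav>
"""

if __name__ == "__main__":
    print(static_links(SAMPLE_HTML, "https://www.example.com/"))
```

In the sample, the pricing page is only reachable through a click handler, so it would not be discovered from this markup alone.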

6. How to Audit Crawlability—Step by Step

Submit your sitemap in Google Search Console

Run URL Inspection on key pages

Crawl your site with Screaming Frog, noting errors and orphan pages

Check robots.txt in your browser (yourdomain.com/robots.txt)

Test load times with PageSpeed Insights

Review log files to see crawl frequency

7. When Crawlability Impacts Rankings

Even with great content, poor crawlability can undercut your SEO:

Missing content in SERPs

Google changing canonicals unexpectedly

Delayed indexing of new pages

Search interest not converting into traffic

By fixing foundational issues, you unlock the potential of your content strategy—moving other marketing efforts from unseen to searchable.

Final Takeaways: Crawlability Is Your SEO Foundation

| Element | Why It’s Essential | How to Ensure It Works |
|---|---|---|
| Crawlability | Ensures search engines can find your pages | Use sitemap, internal links, and robots.txt correctly |
| Crawl Budget | Prevents wasting bot visits on low-value URLs | Block irrelevant pages and optimize site structure |
| Indexability | Makes pages eligible for search listings | Apply or remove noindex, fix canonical issues |
| Technical Health | Supports both speed and bot access | Audit load times, fix errors, resolve duplicates |

Our Blog

Digital Marketing Insights

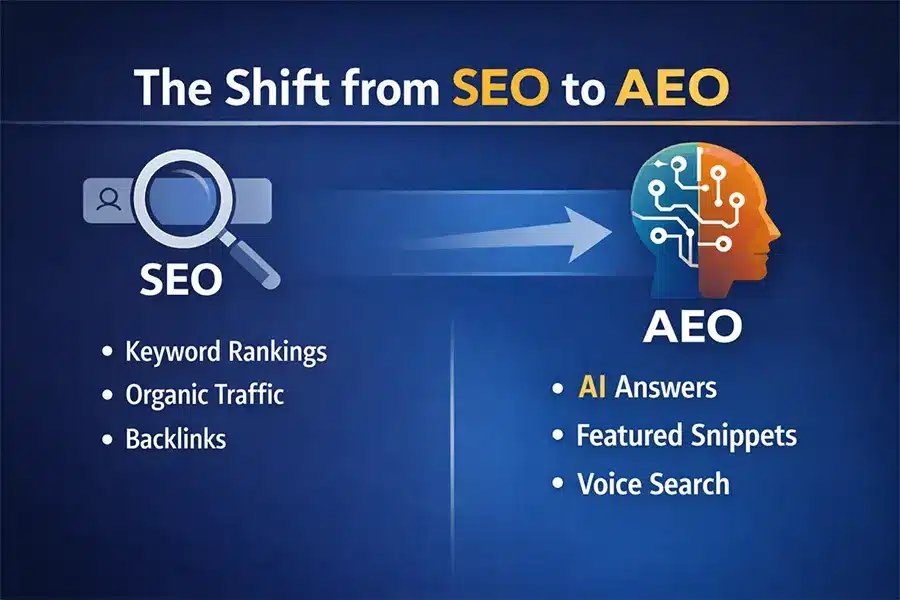

Why SEO Is Shifting — Answer Engine Optimization (AEO) Is the Next Frontier

Why SEO Is Shifting — Answer Engine Optimization (AEO) Is the Next Frontier January 21, 2026 SEO Table of Contents The Evolution of Search Is Happening Now Search...

Why Stopping Your Blog Could Hurt Your Business — And How to Recover

Why Stopping Your Blog Could Hurt Your Business — And How to Recover December 8, 2025 SEO Table of Contents The Surprising Power of Blogging — Why It...

A Simple Breakdown of the 4 Types of SEO Every Business Owner Should Know

A Simple Breakdown of the 4 Types of SEO Every Business Owner Should Know December 5, 2025 Web Design Table of Contents Why Understanding the 4 Types of...

Ready to take the next step?

Contact us today to see how we can make digital marketing work for your business.